Bias and fairness in speech recognition determine how accurately a system understands diverse voices and accents. If a system’s training data underrepresents certain genders, accents, or speech patterns, it may misinterpret or ignore some users, creating barriers to access. Addressing these issues involves expanding training datasets to include a wider range of voices and then measuring and correcting the accuracy gaps that remain. By exploring this topic further, you can learn how to make speech technology more equitable and effective for everyone.

Key Takeaways

- Speech recognition systems often perform poorly with diverse accents due to limited, non-representative training datasets.

- Gender bias can make accuracy differ between female and male voices, often linked to pitch and to imbalanced training data, leading to unequal user experiences.

- Biases reduce accessibility for marginalized groups and can reinforce societal stereotypes and inequalities.

- Incorporating diverse, inclusive datasets and addressing bias during development improves fairness and system accuracy.

- Improving fairness in speech recognition enhances trust, accessibility, and equitable technology use across all user demographics.

Speech recognition technology has become an integral part of daily life, powering virtual assistants, transcription services, and accessibility tools. Despite its widespread use, however, it’s far from perfect. One of the major issues you might notice is how accent diversity affects accuracy. Many speech recognition systems struggle with unfamiliar accents, which leads to misinterpretations and errors. If your accent differs from those that dominate the training data, you might find that your commands aren’t recognized correctly or that transcription quality drops considerably. This happens because many systems are trained on limited datasets that lack sufficient representation of various accents, so the resulting models favor some speech patterns over others. When these systems don’t account for accent diversity, they create a barrier for many users, making the technology less accessible and reinforcing existing inequalities.
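
One way to see the accent gap in numbers is to compute word error rate (WER) separately for each accent group. The sketch below is a minimal, hypothetical example: it assumes you already have reference transcripts and system outputs labeled with an accent group, and the group names and sentences are made up for illustration. WER here is the word-level edit distance (substitutions, insertions, and deletions) divided by the number of reference words, pooled per group.

```python
from collections import defaultdict


def edit_distance(ref, hyp) -> int:
    """Word-level Levenshtein distance between a reference and a hypothesis."""
    # prev[j] is the distance between the reference words seen so far and hyp[:j]
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (ref_word != hyp_word)
            curr[j] = min(prev[j] + 1,      # deletion
                          curr[j - 1] + 1,  # insertion
                          substitution)     # substitution or exact match
        prev = curr
    return prev[-1]


def wer_by_group(samples):
    """Pool word errors and reference word counts per group, then divide."""
    errors = defaultdict(int)
    words = defaultdict(int)
    for group, reference, hypothesis in samples:
        ref = reference.lower().split()
        hyp = hypothesis.lower().split()
        errors[group] += edit_distance(ref, hyp)
        words[group] += len(ref)
    return {group: errors[group] / words[group] for group in words if words[group] > 0}


# Hypothetical evaluation data: (accent group, what was said, what the system heard)
samples = [
    ("accent_a", "turn on the kitchen lights", "turn on the kitchen lights"),
    ("accent_a", "set a timer for ten minutes", "set a timer for ten minutes"),
    ("accent_b", "turn on the kitchen lights", "turn on the chicken lights"),
    ("accent_b", "set a timer for ten minutes", "set a time for minutes"),
]

for group, wer in sorted(wer_by_group(samples).items()):
    print(f"{group}: WER = {wer:.1%}")
```

With this made-up data, accent_a has no errors while accent_b has 3 errors over 11 reference words (about 27%). Pooling errors per group, rather than averaging per-utterance WER, keeps short commands from counting as much as long ones.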

Alongside accent bias, gender bias also plays a crucial role in the performance of speech recognition tools. You might notice that virtual assistants respond better to some voices than to others. Evaluations have reported gaps in both directions: some systems recognize female voices, including higher-pitched ones, more accurately, while others make more errors on them. These imbalances stem largely from training data that contains a disproportionate number of samples from one gender, which skews accuracy. Such bias doesn’t just affect user experience; it can also perpetuate societal stereotypes by implying that certain voices or speech patterns are more ‘standard’ or ‘correct.’ When speech recognition systems are biased toward one gender’s voices, users who don’t fit that norm may find the technology less reliable or even frustrating to use.
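
Because the imbalance starts in the training data, a practical first step is simply to count who is in it. The sketch below assumes hypothetical metadata with one record per audio clip and `gender` and `accent` fields; real corpora label speakers in many different ways, and counting hours of audio rather than clips is often more informative. The 30% cutoff for "underrepresented" is an arbitrary illustration, not a standard.

```python
from collections import Counter


def group_shares(metadata, attribute):
    """Fraction of clips belonging to each value of one speaker attribute."""
    counts = Counter(record[attribute] for record in metadata)
    total = sum(counts.values())
    return {group: count / total for group, count in counts.items()}


def underrepresented(shares, min_share):
    """Groups whose share falls below a chosen (arbitrary) minimum, sorted by name."""
    return sorted(group for group, share in shares.items() if share < min_share)


# Hypothetical metadata: one record per training clip
metadata = (
    [{"gender": "male", "accent": "general_american"}] * 5
    + [{"gender": "male", "accent": "nigerian_english"}]
    + [{"gender": "female", "accent": "general_american"}]
    + [{"gender": "female", "accent": "scottish_english"}]
)

gender_shares = group_shares(metadata, "gender")
accent_shares = group_shares(metadata, "accent")

print(gender_shares)                          # {'male': 0.75, 'female': 0.25}
print(underrepresented(gender_shares, 0.3))   # ['female']
print(underrepresented(accent_shares, 0.3))   # ['nigerian_english', 'scottish_english']
```

An audit like this only shows who is missing from the data; the per-group accuracy measurement is still needed to confirm that the imbalance actually produces an accuracy gap.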

These biases can also have real-world consequences, particularly for marginalized groups. If a system consistently misinterprets your speech because of your accent or gender, it erodes your trust in the technology. It can also limit your access to services that depend on accurate voice recognition, from voice-activated devices to critical accessibility tools. Expanding training datasets with vetted recordings that cover a broad range of voices, accents, and speech variations is essential, as is actively measuring and correcting gender gaps so that everyone benefits equally from advances in speech recognition. Ultimately, making these systems more equitable isn’t just about fairness; it’s about creating technology that truly serves everyone.
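
Collecting more diverse recordings is the real fix, but while that data is being gathered, a common stopgap is to rebalance the data you already have. The sketch below uses inverse-frequency weighting, one technique among several and not a method the article prescribes: each clip is weighted so that every group contributes the same total weight, and Python’s `random.choices` then draws a training batch in which groups appear at roughly equal rates. The clip IDs and group labels are hypothetical.

```python
import random
from collections import Counter


def balancing_weights(groups):
    """Weight each sample by total / (number_of_groups * size_of_its_group).

    Every group then sums to the same total weight, and all weights sum to len(groups).
    """
    counts = Counter(groups)
    n_groups = len(counts)
    total = len(groups)
    return [total / (n_groups * counts[group]) for group in groups]


# Hypothetical training clips: 9 from one accent group, 1 from another
clip_ids = [f"clip_{i}" for i in range(10)]
groups = ["accent_a"] * 9 + ["accent_b"]
group_of = dict(zip(clip_ids, groups))

weights = balancing_weights(groups)

# Draw a batch with replacement; accent_b is now picked about half the time
rng = random.Random(0)
batch = rng.choices(clip_ids, weights=weights, k=1000)
share_b = sum(group_of[clip] == "accent_b" for clip in batch) / len(batch)
print(f"accent_b share of batch: {share_b:.2f}")
```

Reweighting only changes how often existing clips are seen; it does not add new voices, so heavily oversampling a small group can cause a model to overfit to those few speakers.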

As an affiliate, we earn on qualifying purchases.

Frequently Asked Questions

How Do Speech Recognition Biases Impact Different Accents?

Speech recognition bias can cause your accent to be misrecognized, especially if the system was trained on little speech from speakers who share it. Because accent diversity is often limited in training data, error rates tend to be higher for underrepresented accents. By treating fairness as an explicit goal, for example by measuring accuracy separately for each accent group and closing the gaps they find, developers can improve how these systems handle different speech patterns. This reduces errors and creates a more inclusive experience for users with various accents.

What Are the Ethical Considerations in Addressing Bias?

Addressing bias raises several layered ethical concerns, and two priorities stand out: algorithm transparency and data diversity. You’re responsible for ensuring fair treatment across all users, avoiding harm, and promoting inclusivity. In practice, that means scrutinizing how training data influences outcomes, challenging biases when they appear, and being open about how the system was built and tested. By doing so, you create a speech recognition system that’s more equitable, trustworthy, and able to serve everyone’s needs.

How Can Users Identify Biased Speech Recognition Outputs?

You can identify biased speech recognition outputs by watching for errors that cluster around certain accents, dialects, or speech patterns. A practical check is to have several people read the same phrases and compare how often the system gets each of them wrong; a consistent gap suggests bias rather than chance. A lack of algorithm transparency makes it harder to understand why such gaps occur, so user education is key: learn how these systems work and where their limitations lie, so you can critically evaluate when outputs seem unfair or biased.
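
To turn that same-phrases check into numbers, you can compare each speaker’s error rate with the best-performing one. The sketch below is illustrative only: it assumes you have already computed a WER for each speaker, and the 1.25 ratio threshold for flagging a gap is an arbitrary choice, not an established standard. With only a handful of phrases per speaker, treat a flag as a prompt for more testing rather than proof of bias.

```python
def disparity_report(wer_by_group, max_ratio=1.25):
    """Compare each group's WER with the lowest WER observed.

    'gap' is the absolute difference and 'ratio' the relative one; a group is
    flagged when its ratio exceeds max_ratio (an arbitrary illustrative threshold).
    """
    best = min(wer_by_group.values())
    report = {}
    for group, wer in sorted(wer_by_group.items()):
        if best > 0:
            ratio = wer / best
        else:
            # Avoid dividing by zero when the best group made no errors
            ratio = float("inf") if wer > 0 else 1.0
        report[group] = {"gap": wer - best, "ratio": ratio, "flagged": ratio > max_ratio}
    return report


# Hypothetical per-speaker WERs from reading the same phrases
measured = {"speaker_1": 0.08, "speaker_2": 0.09, "speaker_3": 0.20}

for group, row in disparity_report(measured).items():
    status = "FLAG" if row["flagged"] else "ok"
    print(f"{group}: gap={row['gap']:+.2f} ratio={row['ratio']:.2f} {status}")
```

Reporting both the absolute gap and the ratio matters: a ratio of 2 means little when both error rates are tiny, while a small ratio can still hide a large absolute difference when overall error rates are high.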

Are There Specific Demographic Groups More Affected by Bias?

Yes. Speakers with regional or less common accents, dialects, or speech patterns tend to face greater challenges with speech recognition, and these demographic disparities lead to inaccuracies and misunderstandings. Linguistic and cultural coverage also plays a role, since speech systems are not equally trained on all languages or cultural expressions. Recognizing these issues helps you advocate for more inclusive technology that respects all voices equally.

What Role Do Policymakers Play in Ensuring Fairness?

Policymakers play a vital role by enforcing policies and creating regulatory frameworks that promote fairness in speech recognition. You can influence this process by advocating for laws that require transparent testing and bias mitigation. Such requirements push companies to prioritize equitable technology, hold them accountable, and help prevent discrimination. Ultimately, policymakers help shape standards that promote fair access and reduce bias, fostering more inclusive speech recognition systems for everyone.

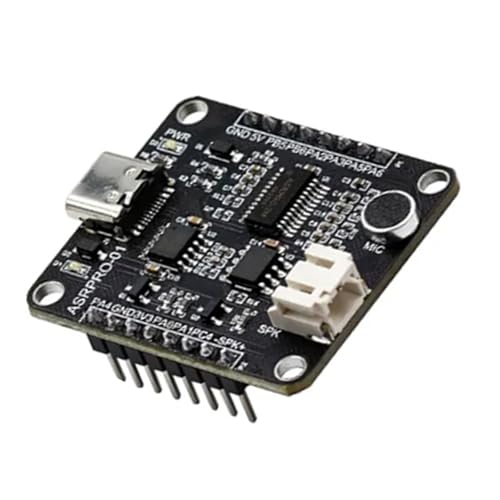

TINGUT Speech Recognition ASRPRO Voice Intelligent Recognition Controls Module with 4MB Flashing Memory for Electronics

Unleash voice with ASRPRO Voice Recognition Controls Module, high performances development board equipped with 4MB Flashing memory for…

As an affiliate, we earn on qualifying purchases.

As an affiliate, we earn on qualifying purchases.

Conclusion

Addressing bias and fairness in speech recognition isn’t optional; it’s essential. Imagine a healthcare system where voice assistants misinterpret certain dialects, limiting access to care for some communities. By actively working to reduce bias, you help create technology that serves everyone equally. Your efforts can lead to more inclusive voice interfaces in which diverse voices are understood and valued. Ultimately, embracing fairness in speech recognition paves the way for a more equitable and accessible future for all users.
